Wasted cloud spend

With public and hybrid cloud adoption growing rapidly, enterprises and organizations continue to increase their spending on cloud infrastructure. However, most of this increased spending is not turning into business revenue.

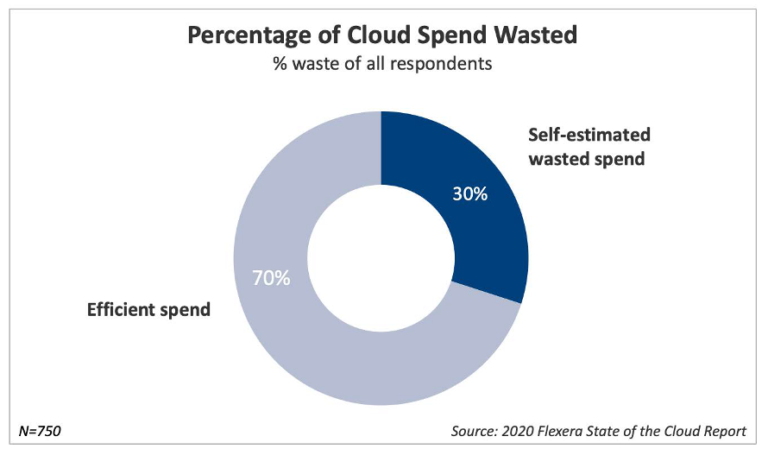

In Flexera's 2020 State of the Cloud Report [1], which surveyed over 750 technical professionals worldwide, respondents estimated that their organizations waste 30 percent of their cloud spend, and Flexera found that actual waste is 35 percent or even higher. This significant waste is driving organizations to focus on cost savings: about 73 percent of the organizations surveyed plan to optimize their existing cloud resources to save more. However, most organizations struggle to manage their growing cloud spend because they lack the resources or expertise [2].

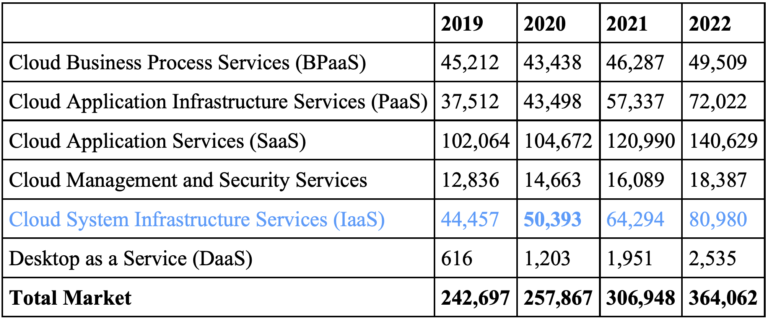

Let's run some numbers on the 35 percent waste figure. Gartner recently predicted [3] that spending on Cloud System Infrastructure Services (IaaS) will reach $50 billion in 2020, an increase of 13% from 2019. At 35 percent waste, that means about $17.5 billion of wasted cloud spend in 2020 alone, and this number will keep growing along with the cloud. A separate study from ParkMyCloud [4] reaches a similar conclusion: wasted cloud spend would exceed $17.6 billion in 2020.
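The estimate above is a single multiplication; the sketch below only makes the inputs explicit so the figure can be re-run under other assumptions, such as the respondents' 30 percent self-estimate instead of Flexera's 35 percent. The constants are the rounded figures quoted in this article, not fresh data, and the function name is illustrative.

```python
# Inputs are the rounded figures quoted in this article.
IAAS_SPEND_2020_USD = 50e9      # Gartner's 2020 IaaS forecast [3]
WASTE_FRACTION = 0.35           # Flexera's estimate of actual waste [1]
SELF_ESTIMATED_FRACTION = 0.30  # respondents' self-estimate [1]

def estimated_waste(spend_usd: float, waste_fraction: float) -> float:
    """Portion of total spend that is wasted, in the same currency unit."""
    return spend_usd * waste_fraction

for label, fraction in (("Flexera estimate", WASTE_FRACTION),
                        ("self-estimate", SELF_ESTIMATED_FRACTION)):
    waste = estimated_waste(IAAS_SPEND_2020_USD, fraction)
    print(f"{label}: ${waste / 1e9:.1f} billion wasted in 2020")
```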

As the title of an article [5] by Larry Dignan (Editor in Chief of ZDNet) puts it, cloud cost control is becoming a leading issue for businesses.

Figure 1. Percentage of Cloud Spend Wasted

Table 1. Worldwide Public Cloud Service Revenue Forecast (Millions of U.S. Dollars)

BPaaS = business process as a service; IaaS = infrastructure as a service; PaaS = platform as a service; SaaS = software as a service

Source: Gartner (July 2020)

Challenges of reducing cloud spend waste

The pay-as-you-go pricing model of cloud infrastructure services claims that you pay only for what you use. That is not entirely accurate: more precisely, you pay for what you allocate. Every resource allocated to your applications during a billing period is charged whether you use it or not. Wasted cloud spend therefore comes from idle resources, and idle resources come from over-provisioning.
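To make "you pay for what you allocate" concrete, the sketch below bills a hypothetical node by its allocated vCPUs and reports how much of that bill paid for idle capacity. The per-vCPU-hour price and the usage samples are placeholders, not real provider prices, and real bills also include memory, storage, and network charges that this sketch ignores.

```python
from dataclasses import dataclass

@dataclass
class HourlySample:
    allocated_vcpus: float  # vCPUs provisioned, and therefore billed, during the hour
    used_vcpus: float       # vCPUs actually consumed during the hour

def billed_and_idle_cost(samples, price_per_vcpu_hour):
    """Return (billed_cost, idle_cost) for a list of hourly samples.

    Billing follows allocation, not usage, so idle capacity is paid for too.
    """
    billed = sum(s.allocated_vcpus for s in samples) * price_per_vcpu_hour
    idle_vcpu_hours = sum(max(s.allocated_vcpus - s.used_vcpus, 0.0) for s in samples)
    return billed, idle_vcpu_hours * price_per_vcpu_hour

# Hypothetical: 16 vCPUs allocated for 4 hours at a placeholder $0.05 per vCPU-hour.
samples = [HourlySample(16, 4), HourlySample(16, 12), HourlySample(16, 6), HourlySample(16, 10)]
billed, idle = billed_and_idle_cost(samples, price_per_vcpu_hour=0.05)
print(f"billed=${billed:.2f}, idle=${idle:.2f} ({idle / billed:.0%} of the bill)")
```

Here half of the bill pays for capacity that was never used, even though the node was never saturated.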

James Beswick bursts the pay-as-you-go bubble in his article “The cloud is on and the meter’s running — avoid the sticker shock of ‘pay as you go’” [6]. He points out the difficulty in understanding cloud billing. A fine-tuning process with a steep learning curve is needed for cost optimization when migrating applications to the cloud.

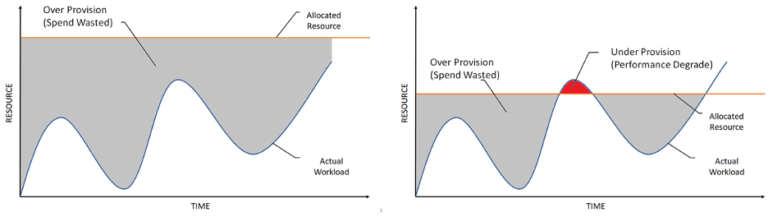

Before migrating applications to the public cloud, most organizations find it almost impossible to understand their workload requirements and estimate the infrastructure resources they need. The easiest and safest approach is to over-provision: at the start, the IT department allocates a large, fixed amount of resources so that applications never run out. That leaves many resources idle, which means significant wasted cloud spend. Conversely, if the IT department periodically reviews its cloud spend and tries to allocate fewer resources, the lack of visibility into, and ability to predict, the resources actually needed can lead to under-provisioning. A fixed allocation simply cannot match a dynamically changing workload, as Figure 2 shows.

Figure 2. Over-provisioning leads to wasted spending, and under-provisioning leads to performance degradation.
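The trade-off in Figure 2 can be reproduced in a few lines: given a demand series and a fixed capacity, count the capacity paid for but unused (idle) and the demand that could not be served (unmet, a rough proxy for performance degradation). The demand numbers and the function name are made up for illustration.

```python
def provisioning_report(demand, capacity):
    """Compare a fixed capacity against a demand series.

    Returns (idle_units, unmet_units): capacity paid for but unused,
    and demand that exceeded capacity.
    """
    idle = sum(max(capacity - d, 0) for d in demand)
    unmet = sum(max(d - capacity, 0) for d in demand)
    return idle, unmet

# Hypothetical daily demand in vCPUs, sampled every 3 hours.
demand = [4, 3, 6, 14, 18, 16, 10, 5]

for capacity in (18, 8):  # over-provisioned vs. under-provisioned
    idle, unmet = provisioning_report(demand, capacity)
    print(f"capacity={capacity:>2}: idle={idle}, unmet={unmet}")
```

Sizing for the peak (18) leaves 68 vCPU-slots idle; sizing near the average (8) cuts idle capacity but leaves 26 vCPU-slots of demand unserved. No single fixed value avoids both.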

Right-sizing is one way to reduce cloud spending. Other methods include running workloads on a cheaper cloud provider or region while maintaining the same SLA, selecting lower-priced instance types with similar performance, and taking advantage of reserved and spot instances when possible. However, most organizations lack the expertise to apply these methods, so the right solution is needed to optimize operating costs. A well-designed solution can help significantly with cost optimization if it offers visibility into, and projections of, workload costs and continuously recommends the right environment to run the workloads.

Why Federator.ai can help reduce cloud spend

Federator.ai is an Artificial Intelligence for IT Operations (AIOps) platform that provides intelligence to orchestrate container resources in environments such as Kubernetes/OpenShift clusters, either on-premises or in public clouds. Using machine learning and other data analytics tools, Federator.ai predicts a cluster's resource usage from past observations. It captures the dynamic nature of resource utilization throughout the cluster deployment lifecycle and provides visibility into usage patterns for cluster resource planning. By combining these predictions with the price plans of the major public cloud providers (AWS, Azure, and GCP), Federator.ai translates cluster resource usage metrics and forecasts into the most cost-effective, actionable recommendations for right-sizing a cluster.

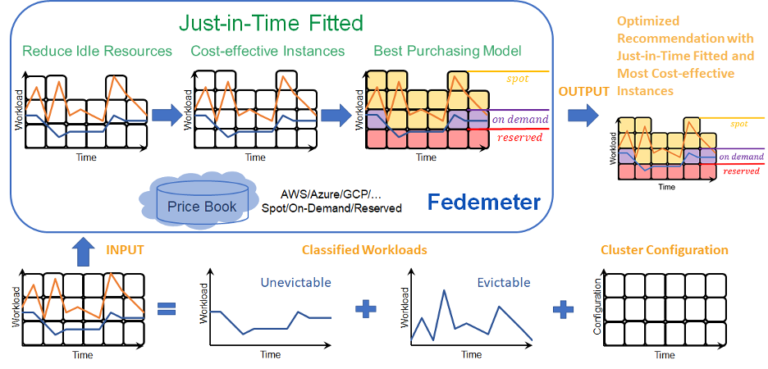

Fedemeter, the patent-pending cost analysis module of Federator.ai, takes the current cluster configuration and the workload prediction as input and recommends the most cost-optimized cluster configuration. The output is a time series of Just-in-Time Fitted instance sizes that support application workloads without wasting resources. It recommends the most cost-effective instance types with the best purchasing options, which may mean switching to a different cloud provider or region, or staying with the same provider, depending on the user's preference.

Figure 3 shows the three main considerations in deciding on the recommended cluster configuration. The first is to examine how many idle resources can be eliminated. The second is to search for cost-effective instances that meet the expected workloads. The last is to weigh the different purchasing options, according to the user's preferences, to finalize the recommendation.

Reducing Idle Resources

The observed resource usage metrics (CPU/memory) of application workloads are gathered and classified by characteristics such as SLA (evictable/unevictable) or lifetime (continuous/scheduled/batch). Federator.ai's machine learning algorithms are then applied to generate workload predictions at different time granularities. Based on these predicted resource usage metrics, Federator.ai eliminates over-provisioned idle resources in the current cluster configuration and translates the metrics into a time series of Just-in-Time Fitted instance sizes.
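The predict-then-fit step can be sketched roughly as follows. Federator.ai's machine learning models are not described in this article, so the sketch swaps in a deliberately naive stand-in, a seasonal maximum (for each time slot, the highest value seen in that slot on previous days), and then maps each forecast plus a headroom margin onto the smallest size in a hypothetical size menu. The names `seasonal_max_forecast`, `just_in_time_fit`, `INSTANCE_SIZES`, and the 10 percent default headroom are illustrative assumptions.

```python
import math
from collections import defaultdict

# Hypothetical instance sizes in vCPUs; not tied to any real provider catalog.
INSTANCE_SIZES = [2, 4, 8, 16, 32]

def seasonal_max_forecast(history, period):
    """Naive stand-in predictor: for each slot in the period, take the max observed value.

    history is a flat list of past observations, e.g. vCPU usage per time slot over several days.
    """
    slots = defaultdict(float)
    for i, value in enumerate(history):
        slot = i % period
        slots[slot] = max(slots[slot], value)
    return [slots[s] for s in range(period)]

def just_in_time_fit(forecast, headroom=0.1):
    """Map each forecast value (plus headroom) to the smallest size that covers it."""
    plan = []
    for value in forecast:
        need = value * (1 + headroom)
        fitted = next((size for size in INSTANCE_SIZES if size >= need), None)
        if fitted is None:  # demand exceeds the largest size: use several of the largest
            fitted = math.ceil(need / INSTANCE_SIZES[-1]) * INSTANCE_SIZES[-1]
        plan.append(fitted)
    return plan

history = [3, 7, 14, 5, 4, 6, 12, 6]  # two "days" of four 6-hour slots
forecast = seasonal_max_forecast(history, period=4)
plan = just_in_time_fit(forecast)
print(forecast, plan)
```

The result is a time series of capacities, one per slot, rather than a single size fixed at the peak. A real predictor would also account for trend and forecast uncertainty; the seasonal maximum is conservative, not accurate.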

Using the Most Cost-Effective Instance Types

A cluster configuration is a set of metadata describing the underlying instances running in the cloud, including the cloud provider, region, instance type, storage, size, and purchasing model of each instance. Instances with similar specifications and performance can have very different prices across providers, or across regions of the same provider.
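One way to model such a configuration is as a list of per-instance records. The field names, enum values, and placeholder provider, region, and instance names below are assumptions for illustration, not Federator.ai's actual schema, and the prices are not real quotes.

```python
from dataclasses import dataclass, field
from enum import Enum

class PurchasingModel(Enum):
    ON_DEMAND = "on-demand"
    RESERVED = "reserved"
    SPOT = "spot"

@dataclass(frozen=True)
class InstanceSpec:
    """Metadata for one instance in a cluster configuration (hypothetical schema)."""
    provider: str                      # e.g. "aws", "azure", "gcp"
    region: str
    instance_type: str
    vcpus: int                         # size
    memory_gib: float                  # size
    storage_gib: int
    purchasing_model: PurchasingModel
    hourly_price_usd: float            # taken from the provider's price book

@dataclass
class ClusterConfiguration:
    instances: list[InstanceSpec] = field(default_factory=list)

    def total_vcpus(self) -> int:
        return sum(i.vcpus for i in self.instances)

    def hourly_cost(self) -> float:
        return sum(i.hourly_price_usd for i in self.instances)

# Placeholder values, not real instance names or prices.
config = ClusterConfiguration([
    InstanceSpec("provider-a", "region-1", "general-4x16", 4, 16.0, 100, PurchasingModel.RESERVED, 0.12),
    InstanceSpec("provider-a", "region-1", "general-8x32", 8, 32.0, 200, PurchasingModel.SPOT, 0.09),
])
print(config.total_vcpus(), round(config.hourly_cost(), 2))
```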

All cloud providers have their own pricing strategies and models, and cost calculations involve complicated formulas that differ from provider to provider. Prices also vary by region, instance specification, operating system and preinstalled software, and storage type. That makes it difficult to determine the most cost-effective instance type and region. Frequent price-list updates, driven by new deals and newly released instance types, families, and data centers, make the situation worse.

Federator.ai automatically and continuously compiles the price books of AWS, Azure, and GCP and intelligently searches for the most cost-effective instance types and regions. Choosing the right instance type in the appropriate region typically yields savings of up to 40%.
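At its core, the search described above is a filter-then-minimize over price-book entries. The sketch below assumes a tiny in-memory price book with placeholder providers, regions, instance names, and prices (not real quotes), plus an optional provider allow-list to honor a user's preference to stay with one provider. `Offer` and `cheapest_offer` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Offer:
    provider: str
    region: str
    instance_type: str
    vcpus: int
    memory_gib: float
    hourly_price: float

def cheapest_offer(price_book, min_vcpus, min_memory_gib, allowed_providers=None):
    """Return the lowest-priced offer that meets the resource requirements, or None."""
    candidates = [
        o for o in price_book
        if o.vcpus >= min_vcpus and o.memory_gib >= min_memory_gib
        and (allowed_providers is None or o.provider in allowed_providers)
    ]
    return min(candidates, key=lambda o: o.hourly_price, default=None)

# Hypothetical price book; names and prices are placeholders, not real quotes.
PRICE_BOOK = [
    Offer("provider-a", "region-1", "general-4x16", 4, 16, 0.20),
    Offer("provider-a", "region-2", "general-4x16", 4, 16, 0.17),
    Offer("provider-b", "region-1", "standard-4x16", 4, 16, 0.19),
    Offer("provider-b", "region-3", "compute-8x16", 8, 16, 0.31),
    Offer("provider-c", "region-1", "small-2x8", 2, 8, 0.09),
]

best = cheapest_offer(PRICE_BOOK, min_vcpus=4, min_memory_gib=16)
same_provider = cheapest_offer(PRICE_BOOK, 4, 16, allowed_providers={"provider-b"})
print(best)
print(same_provider)
```

A real comparison would also have to price the operating system, preinstalled software, storage, and data transfer mentioned above, and refresh the price book as providers publish changes.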

Choosing the Best Purchasing Models

To maximize the utilization of their infrastructure, cloud providers usually offer different pricing models for different types of workloads. For example, providers offer a “reserved instance” purchasing option in which users commit to a fixed amount of resources for a set term (typically one or three years), whether or not they actually use those resources. Compared with on-demand pricing, reserved instance discounts typically range from 30% to 70%. Providers also sell their spare infrastructure capacity through “spot” purchasing options. The discount is even larger, up to 90%, but the resources can be reclaimed on short notice.
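Whether a reservation pays off depends on how much of the term the instance actually runs. If p is the on-demand hourly price, d the reserved discount, H the hours used, and T the hours in the term, on-demand costs pH while a reservation costs p(1 - d)T, so the reservation is cheaper only when H/T > 1 - d. With a 40 percent discount, for instance, the instance must run more than 60 percent of the term. The sketch below applies this to a single instance over a one-year term; the price and discounts are example inputs within the ranges quoted above, and it ignores real-world details such as upfront-payment options and fluctuating spot prices.

```python
HOURS_PER_YEAR = 8760  # one-year term, ignoring leap years

def annual_costs(on_demand_hourly, hours_used, reserved_discount, spot_discount,
                 hours_in_term=HOURS_PER_YEAR):
    """Cost of one instance over a term under three purchasing models."""
    return {
        # On-demand: pay the full rate, but only for the hours the instance runs.
        "on_demand": on_demand_hourly * hours_used,
        # Reserved: pay the discounted rate for every hour of the term, used or not.
        "reserved": on_demand_hourly * (1 - reserved_discount) * hours_in_term,
        # Spot: discounted rate for hours used; capacity may be reclaimed at short notice.
        "spot": on_demand_hourly * (1 - spot_discount) * hours_used,
    }

def reserved_break_even_utilization(reserved_discount):
    """Fraction of the term an instance must run for a reservation to beat on-demand."""
    return 1 - reserved_discount

# Example inputs: $0.10/hour on demand, running half the year, 40% reserved and 70% spot discounts.
costs = annual_costs(0.10, hours_used=HOURS_PER_YEAR // 2, reserved_discount=0.4, spot_discount=0.7)
print({k: round(v, 2) for k, v in costs.items()})
print(reserved_break_even_utilization(0.4))
```

At 50 percent utilization the reservation costs more than on-demand; at full utilization it is cheaper, which is why only steady workloads belong on reserved capacity.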

Using reserved and spot instances can significantly reduce costs, but not all application workloads are suitable for running on spot or reserved instances. By understanding the characteristics (SLA, lifetime, etc.) of application workloads, Federator.ai uses classified workload predictions to recommend the best combination of purchasing models for cluster instances. For example, evictable application workloads can be aggregated and placed on spot instances. Continuous workloads, and scheduled workloads running in different time frames, can be aggregated and supported with reserved instances. Unevictable workloads with dynamically changing utilization are better suited to on-demand instances.
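The mapping described above can be written as a few rules. This is a simplified reading of the text, not Federator.ai's decision logic; the `Workload` fields, including the `dynamic_usage` flag, and the example workload names are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Workload:
    name: str
    evictable: bool      # SLA: can the workload tolerate eviction?
    lifetime: str        # "continuous", "scheduled", or "batch"
    dynamic_usage: bool  # does utilization change unpredictably?

def recommend_purchasing_model(w: Workload) -> str:
    """Simplified rules following the text; real decisions also weigh price and risk."""
    if w.evictable:
        return "spot"        # can be reclaimed on short notice without breaking its SLA
    if w.dynamic_usage:
        return "on-demand"   # unevictable and unpredictable: pay only for what runs
    if w.lifetime in ("continuous", "scheduled"):
        return "reserved"    # steady or predictable demand keeps a reservation busy
    return "on-demand"

def group_by_model(workloads):
    """Aggregate workload names by the purchasing model recommended for them."""
    groups: dict[str, list[str]] = {}
    for w in workloads:
        groups.setdefault(recommend_purchasing_model(w), []).append(w.name)
    return groups

workloads = [
    Workload("ci-batch-jobs", evictable=True, lifetime="batch", dynamic_usage=True),
    Workload("web-frontend", evictable=False, lifetime="continuous", dynamic_usage=False),
    Workload("nightly-report", evictable=False, lifetime="scheduled", dynamic_usage=False),
    Workload("checkout-api", evictable=False, lifetime="continuous", dynamic_usage=True),
]
print(group_by_model(workloads))
```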

By considering predicted idle resources, cost-effective instance types, and purchasing options based on user preferences and application workload characteristics, Federator.ai recommends the configuration with the best cost savings.

Using Federator.ai for multicloud cost savings

There are several use cases where Federator.ai can help customers reduce their cloud spend.

Day-1 recommendation for application workload deployment

For customers planning to migrate their application workloads from on-premises clusters to the public cloud, Federator.ai recommends the most cost-effective cluster configuration based on workload prediction and a comparison of prices across cloud providers and regions. The instances in the recommended configuration are also optimized to provide Just-in-Time Fitted resources for the application workloads. This recommendation gives organizations clear visibility into the estimated cost of migrating their applications from on-premises to a public cloud provider, or from one public cloud provider to another. The recommended cluster configuration also gives organizations an optimized, executable plan for allocating the right infrastructure resources on the new public cloud.

Day-2 operation recommendation for cost savings in existing public cloud deployment

Users who already run applications in the public cloud can also benefit from Federator.ai's cost-saving recommendations. Using workload prediction, Federator.ai recommends a cost-optimized configuration within the same provider and region, which users can apply to optimize their existing cluster. Users can also explore purchasing options such as reserved and spot instances, which can significantly reduce cost.

In addition, combined with the Federator.ai Horizontal Pod Autoscaling and Cluster Optimizer features, Federator.ai intelligently and automatically maintains the most cost-effective cluster configuration and delivers optimized application performance for Kubernetes/OpenShift users.

Cost breakdown for cluster namespaces and applications

Each department in an organization can see its hourly resource cost and its percentage of the whole cluster's cost in real time. Past spending and projected future costs are presented in the same chart to better illustrate the trend. Using Federator.ai's public APIs, users can integrate with their own systems to alert the responsible departments to potential cost overruns.
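Proportional allocation is one common way to produce such a breakdown. The sketch below splits a cluster's hourly cost across namespaces by their share of resource usage and flags namespaces whose spent-plus-projected cost exceeds a budget. It does not call Federator.ai's public APIs, whose endpoints are not described here; the function names, namespaces, budgets, and the proportional-split rule are all assumptions.

```python
def namespace_cost_breakdown(cluster_hourly_cost, usage_by_namespace):
    """Split the cluster's hourly cost across namespaces in proportion to their usage.

    Returns {namespace: (hourly_cost, percent_of_cluster_cost)}.
    """
    total = sum(usage_by_namespace.values())
    if total == 0:
        return {ns: (0.0, 0.0) for ns in usage_by_namespace}
    return {
        ns: (cluster_hourly_cost * usage / total, 100.0 * usage / total)
        for ns, usage in usage_by_namespace.items()
    }

def overrun_alerts(spent_so_far, projected_hourly, hours_remaining, budgets):
    """List (namespace, projected_total, budget) for namespaces expected to exceed budget."""
    alerts = []
    for ns, budget in budgets.items():
        projected_total = spent_so_far.get(ns, 0.0) + projected_hourly.get(ns, 0.0) * hours_remaining
        if projected_total > budget:
            alerts.append((ns, projected_total, budget))
    return alerts

# Hypothetical inputs: usage in vCPU-hours over the last hour, costs in USD.
breakdown = namespace_cost_breakdown(10.0, {"team-a": 6, "team-b": 3, "team-c": 1})
alerts = overrun_alerts(
    spent_so_far={"team-a": 3000.0, "team-b": 1000.0},
    projected_hourly={"team-a": 6.0, "team-b": 3.0},
    hours_remaining=240,
    budgets={"team-a": 4000.0, "team-b": 2000.0},
)
print(breakdown)
print(alerts)
```

The alert list is the kind of output that could be forwarded to a department's own notification system.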

Conclusion

Reducing wasted cloud spend is an essential task for any enterprise or organization that runs mission-critical applications in a public cloud or is considering moving to one. Federator.ai's AI-enabled cost analysis uses workload prediction to simplify the cumbersome, complicated process of choosing the right cluster configuration. Continuous analysis of ongoing workloads, together with new recommendations based on predicted future workloads, lets users maintain their cost objectives throughout the deployment lifecycle.

References

- [1] “2020 State of the Cloud Report,” Flexera, 2020. Available: https://info.flexera.com/CM-REPORT-State-of-the-Cloud?id=ELQ-Redirect

- [2] John Engates, “The Cost of Cloud Expertise Report,” Rackspace & LSE, 2017. Available: https://www.lse.ac.uk/business/consulting/assets/documents/the-cost-of-cloud-expertise.pdf

- [3] “Gartner Forecasts Worldwide Public Cloud Revenue to Grow 6.3% in 2020,” Gartner Inc., 2020. Available: https://www.gartner.com/en/newsroom/press-releases/2020-07-23-gartner-forecasts-worldwide-public-cloud-revenue-to-grow-6point3-percent-in-2020

- [4] Jay Chapel, “Wasted Cloud Spend to Exceed $17.6 Billion in 2020, Fueled by Cloud Computing Growth,” Medium, Mar. 21, 2020. Available: https://jaychapel.medium.com/wasted-cloud-spend-to-exceed-17-6-billion-in-2020-fueled-by-cloud-computing-growth-7c8f81d5c616

- [5] Larry Dignan, “Cloud cost control becoming a leading issue for businesses,” ZDNET, Mar. 3, 2019. Available: https://www.zdnet.com/article/cloud-cost-control-becoming-a-leading-issue-for-businesses/

- [6] James Beswick, “The cloud is on and the meter’s running- avoid the sticker shock of ‘pay as you go’,” A Cloud Guru, May 11, 2017. Available: https://medium.com/a-cloud-guru/avoiding-sticker-shock-in-cloud-migration-9ee4c0308182