Introduction

The workloads running in Kubernetes clusters are characterized by their respective Key Performance Indicators (KPIs). To meet diverse application-specific KPI objectives, Kubernetes implements a generic framework, called Horizontal Pod Autoscaling (HPA), that scales the number of pods of the same kind up and down. An HPA controller can autoscale on either a standard metric such as CPU utilization or a custom metric, using this formula: desired replicas = ceiling(current replicas * (current metric / desired target metric)). At first glance, this seems to be a reasonable approach. This white paper shows that the approach is too simplistic for many applications and can waste resources or degrade performance.

It has been observed that application KPIs are not necessarily directly related to pods’ CPU and memory utilization. Scaling the number of pods up or down based on one generic metric may not result in a good KPI for the underlying application. For many applications, e.g., Kafka consumer workloads, multiple metrics may be required to determine the right number of consumers to process the Kafka brokers’ messages without incurring too much processing latency. The message processing latency can be the KPI for this application. More importantly, most workloads may exhibit predictable behavior, and exploiting such behavior will further differentiate an application-aware HPA solution from naive ones.

We also show that, by using application-specific metrics and machine-learning-based workload prediction, ProphetStor’s application-aware HPA reduces the number of required replicas by more than 40% compared to a standard CPU-utilization-based HPA.

Application-Awareness

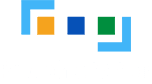

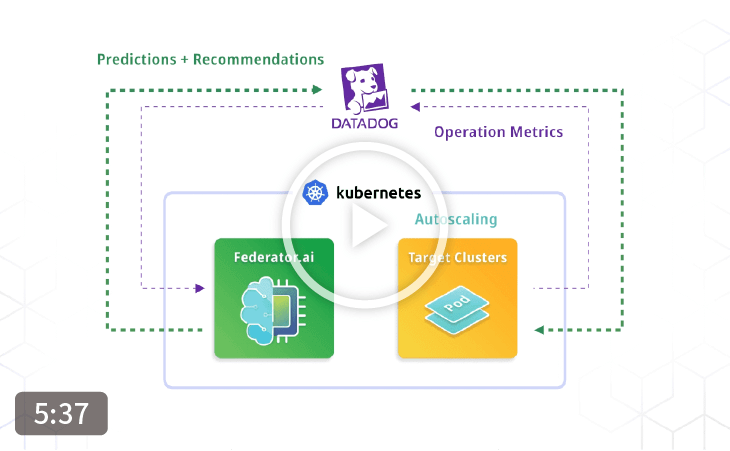

In Kubernetes, scaling criteria can be based on metrics such as CPU utilization, as defined in autoscaling/v1. The HPA framework has been extended to support memory and other generic metrics, as defined in autoscaling/v2beta2 and in the Watermark Pod Autoscaler (WPA) defined by Datadog.

By integrating with Datadog’s agents running on the nodes, one can collect application metrics such as Kafka’s consumer lags and log offsets in a unified and deployment-proof fashion. Our solution leverages the ease of collecting application metrics enabled by Datadog’s agents and focuses on transforming the collected metrics into KPI-aware HPA actions.

KPI for Kafka Consumer Performance

The KPI that we would like to use is “how long a message will stay in the Kafka brokers before a Kafka consumer commits it.” In particular, we define this KPI as a queue latency, equal to Kafka’s consumer lag divided by the total message consumption rate of the consumers. This KPI captures a few essential aspects of Kafka as a messaging service. The KPI improves as we increase the number of consumers toward the number of partitions, and it worsens as we reduce the number of consumers toward 1 or any allowed minimum.
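The queue-latency definition above can be written as a small function. This is only a sketch: the function name, the parameter names, and the choice of messages and messages per second as units are assumptions made for illustration, not part of the original algorithm.

```python
def queue_latency_seconds(consumer_lag_messages: float,
                          consumption_rate_per_second: float) -> float:
    """Estimate how long a message waits in the brokers before being committed.

    Implements the KPI definition from the text: total consumer lag divided
    by the total consumption rate of the consumer group. Names and units
    are illustrative assumptions.
    """
    if consumption_rate_per_second <= 0:
        # With no consumption, any backlog waits indefinitely.
        return float("inf")
    return consumer_lag_messages / consumption_rate_per_second


# Hypothetical numbers: a backlog of 3000 messages drained at 1500 messages/s.
latency = queue_latency_seconds(3000, 1500)
print(latency)
```

With these hypothetical inputs the estimated queue latency is 2 seconds. A stalled consumer group (zero consumption rate) is reported as infinite latency rather than raising an error.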

In a real setup, the Kafka producers inject messages into the Kafka brokers at variable rates. To satisfy a target KPI, e.g., a latency between 1 and 5 seconds, one has multiple choices throughout the lifetime of the changing workload:

1) Over-provision with many consumers such that latency is less than 5 seconds

2) Under-provision with too few consumers such that latency is not controllable

3) Dynamically adjust the number of consumers such that a smaller number of consumers can satisfy the target KPI

Option 1 was the typical approach before HPA became available and is costly when the system is heavily over-provisioned for an extended period. Option 2 is not feasible since it does not consider the KPI and can result in ever-increasing consumer lags. Option 3 is supported by HPA algorithms, including ProphetStor’s application-aware algorithm.

Generic HPA

Kubernetes autoscaling/v1 supports automatic scaling of pods based on observed CPU utilization, which we call generic HPA. A generic HPA controller can be configured to scale the number of pods up or down based on a target average CPU utilization across all pods in the same replica set. For example, suppose an average CPU utilization of 70% is set as the target. If the average CPU utilization exceeds this target, the HPA controller increases the number of replicas to bring the average CPU utilization back down close to the target. Conversely, the HPA controller reduces the number of replicas when the average CPU utilization is below the target value.
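As a worked illustration of the generic scaling rule, the sketch below applies the formula desired replicas = ceiling(current replicas * (current metric / desired target metric)) to the 70% CPU target. The function name and the sample utilization values are assumptions chosen for illustration. The real controller also applies a tolerance band and stabilization behavior, which this sketch omits.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float) -> int:
    """Apply the generic HPA formula from the text.

    desired = ceil(current_replicas * (current_metric / target_metric))
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    return math.ceil(current_replicas * (current_metric / target_metric))


# Hypothetical case: 4 replicas averaging 90% CPU against a 70% target.
scale_up = desired_replicas(4, 90.0, 70.0)

# Hypothetical case: 10 replicas averaging 35% CPU against a 70% target.
scale_down = desired_replicas(10, 35.0, 70.0)

print(scale_up, scale_down)
```

In the first case the controller would scale up from 4 to 6 replicas, and in the second it would scale down from 10 to 5. Note that the rule looks only at the chosen metric: if CPU utilization stays low while consumer lag grows, the formula still recommends fewer replicas.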

Building on autoscaling/v1, the newer autoscaling/v2beta2 extends the supported metrics to additional standard and custom metrics.

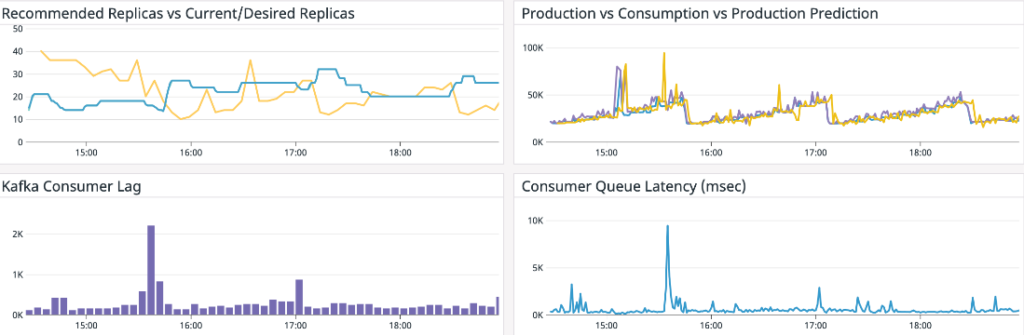

The following shows a run in which a Kafka producer injects messages into the brokers and the consumers consume and commit the messages. The blue line in the top-right widget shows the varying message production rate, which is the consumers’ workload. The blue line in the top-left widget shows the replicas recommended by the autoscaling/v1 HPA controller with target CPU utilization set at 70%. For comparison, the yellow line in the same widget shows the replicas recommended by ProphetStor’s application-aware HPA. Compared to that yellow line, the generic HPA uses 40% more replicas to support the dynamically changing workload after the initial AI algorithm training time.

The top-right widget shows the production rate (change in log offset per minute), the consumption rate (change in current offset per minute), and the predicted production rate in yellow. The bottom-right widget shows the consumer latency over time, which is our KPI.

A Kafka consumer’s performance, which determines the number of replicas needed to support a given KPI, depends on how frequently it polls the brokers, how frequently it commits to the brokers, and how fast it can process messages. The processing can be further characterized as CPU-bound, IO-bound, or a mixture of both. In general, it is challenging to control the application KPI based on a CPU threshold or any generic metric.

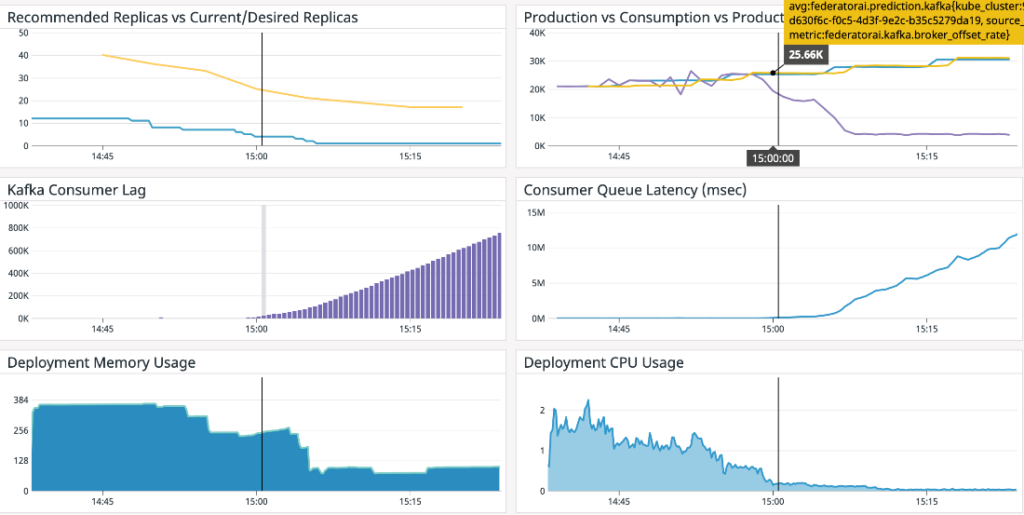

Here is an example in which the HPA target CPU utilization is incorrectly set to 80%, which leads to under-provisioning of the consumers and excessive queue latency. This is because the average CPU utilization never exceeds 80%, even with ever-increasing consumer lags in the system. In the top-left widget, the blue line is the number of consumer replicas, which starts at 10 and eventually drops to 1. The overall queue latency increases excessively due to under-provisioning.

ProphetStor’s Application-Aware HPA

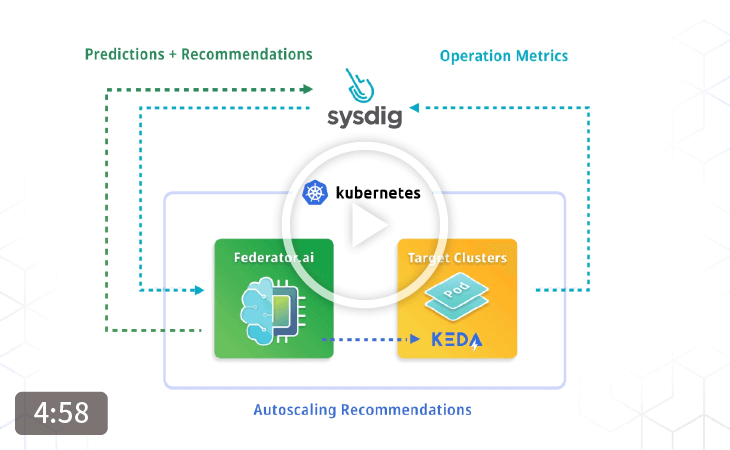

To meet the application-specific KPI, we introduce a class of algorithms, tailored to the Kubernetes HPA, that measure and predict the relevant metrics. Datadog’s agents allow us to collect application metrics effectively and to deploy ProphetStor’s autoscaling recommendations seamlessly without additional instrumentation.

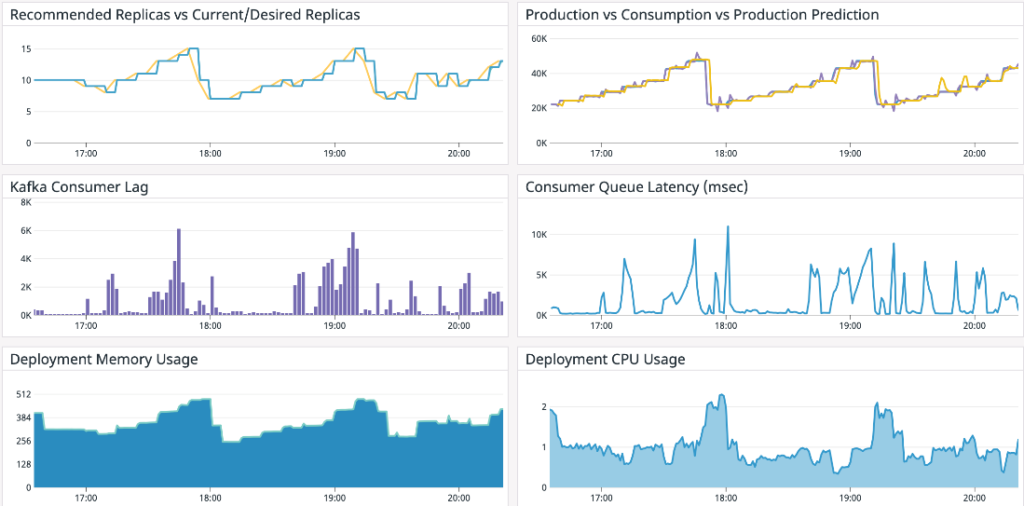

Here is the result for the same workload that we presented earlier. The top-left widget shows the current consumer replicas in blue and the recommended number of replicas in yellow. The top-right widget shows the production rate, consumption rate, and predicted production rate, all in sync. The KPI of consumer queue latency is shown in the middle-right widget.

The results demonstrate that ProphetStor’s application-aware HPA adjusts the number of consumer replicas to track the changing production rate while maintaining an average consumer queue latency of 1.4 seconds. Our target KPI is 6 seconds. The peak number of replicas is 15, and the minimum number of replicas is 7. Compared to the generic HPA, our results require 40% fewer consumer replicas while maintaining a reasonable KPI.

Our Kafka Consumer HPA algorithm is designed to balance the convergence time of estimating the consumer capacity against the target KPI of queue latency, while minimizing the number of consumer replicas under changing consumer workloads. The algorithm dynamically calculates the consumer capacity over time to determine how many messages per minute each consumer can handle at peak load without sacrificing the KPI, which is the latency of messages waiting in the Kafka brokers. Since the capacity is estimated dynamically for each consumer group, the algorithm can adapt to various environments without operator intervention. The only constraint for each consumer group is that the number of consumer replicas in the group cannot exceed the number of partitions of a topic.

When determining a reasonable number of consumer replicas to use, we could simply divide the current production rate by the estimated consumer capacity. The problem with this approach is that it will most likely incur a considerable consumer lag: the KPI will fall outside our target whenever the production rate increases in the immediate future. To maintain the KPI within our target range, we implemented two complementary strategies: using our proprietary machine-learning-based workload prediction of the production rate to determine the number of replicas, and slightly over-provisioning the number of replicas. With workload prediction, we use the maximum predicted production rate in the near future, instead of past observations, to determine the number of replicas. For over-provisioning, we apply a policy of 10% over-provisioning on the number of replicas. In combination, these strategies maintain the queue latency (our KPI) within our target range while adapting the number of consumer replicas to minimize over-provisioning.
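The replica calculation described above can be sketched as follows. This is not ProphetStor’s proprietary algorithm: the function name, the parameter names, the order in which the 10% margin and the rounding are applied, and the sample numbers are all assumptions made for illustration. The predicted rates stand in for the output of a workload predictor that is not shown here.

```python
import math
from typing import Sequence


def recommended_replicas(predicted_rates: Sequence[float],
                         capacity_per_consumer: float,
                         partitions: int,
                         over_provision: float = 0.10,
                         min_replicas: int = 1) -> int:
    """Sketch of a prediction-based replica recommendation.

    predicted_rates: predicted production rates (messages/min) for the
        near future, assumed to come from an external predictor.
    capacity_per_consumer: estimated messages/min one consumer can handle.
    partitions: number of topic partitions, the upper bound on consumers.
    """
    if not predicted_rates:
        raise ValueError("predicted_rates must not be empty")
    if capacity_per_consumer <= 0:
        raise ValueError("capacity_per_consumer must be positive")

    # Size for the maximum predicted rate rather than past observations.
    peak_rate = max(predicted_rates)
    needed = peak_rate / capacity_per_consumer

    # Add the over-provisioning margin, then round up to whole replicas.
    # Rounding to 9 decimals first guards against floating-point noise.
    padded = math.ceil(round(needed * (1.0 + over_provision), 9))

    # A consumer group cannot usefully exceed the partition count.
    return max(min_replicas, min(padded, partitions))


# Hypothetical forecast with a peak of 12000 messages/min,
# 1000 messages/min per consumer, and a 20-partition topic.
print(recommended_replicas([9000, 12000, 10500], 1000, partitions=20))
```

With these hypothetical inputs, 12 consumers would cover the predicted peak, and the 10% margin raises the recommendation to 14. If the topic had only 12 partitions, the result would be capped at 12.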

Conclusion

Application workloads are dynamic. It is challenging to provide just-in-time-fitted resources and the right number of replicas to service a workload throughout its life cycle. Most of the time, the system is over-provisioned with too many replicas or too many resources because the system’s behavior is not clearly understood.

Autoscaling with HPA is a solution for automatically adjusting the resources needed to support dynamic workloads. We have shown that CPU utilization is not the right indicator of the KPI used in many real workloads. Furthermore, guessing the proper target CPU utilization to achieve a good HPA while maintaining a good KPI is too complicated and is not feasible, especially when the workload is dynamic. Although the Kubernetes native HPA and Datadog’s WPA support other standard and custom metrics, they employ a straightforward scaling algorithm that cannot effectively incorporate multiple metrics.

Using the right metrics for application workloads together with machine-learning-based workload prediction, we can achieve a better HPA that optimizes both resource utilization and application KPI. Our application-aware HPA intelligently correlates multiple application metrics and KPI targets to derive the proper Kafka consumer capacity. ProphetStor’s Federator.ai provides machine-learning-based workload prediction and operation plans that adjust the number of replicas dynamically based on the workloads while maintaining the KPI target. We have shown that application-awareness is essential for the proper operation of Kubernetes autoscaling and that, coupled with an understanding of the operating environment and dynamic resource adjustments, it can improve utilization and performance significantly.
