Overview

The customer is one of the world’s most influential research and advisory firms, headquartered in Cambridge, MA. The NASDAQ-listed company provides unique insights that are grounded in annual surveys of more than 675,000 consumers and business leaders worldwide, rigorous and objective methodologies, and the shared wisdom of its innovative clients.

As the business grows, their IT organization operates with ten different DEV teams in different regions, supporting various products and solutions. With a large environment and data center expansion through acquisition, the IT team has worked to understand how to meet application SLAs at minimal cost and with better performance. Furthermore, labor-intensive operational tasks consumed the team, leaving unanswered the question of how to design the IT infrastructure to support the next phase of business expansion.

After several months of careful study, they decided to adopt a containerized MultiCloud infrastructure solution with Kubernetes to automate labor-intensive tasks, scale as the business grows, and optimize their cloud spending. They found that their containerized applications make heavy use of NGINX, Node.js, and Redis, among other services. They adopted a microservices-based approach using containers and Kubernetes orchestration, and their developers are in the process of moving initial parts of the infrastructure and new services to Kubernetes. Administrators love the simplicity of setting up new clusters with a single command and of managing and balancing workloads on thousands of containers at a time. They can also manage data access at a fine-grained level, eliminating the need to set up duplicate machines for security purposes. Before they could put the solution into production, they needed to resolve the following pain points.

Challenge: Manage Complex Operations at Scale

- How to solve the complexity problem? Containerized services are challenging to manage at scale, and performance depends on the underlying architecture’s health. That means system managers must still attend to the details of managing infrastructure.

- How to avoid waste from over-provisioned cloud resources when running their many application workloads? System managers are required to specify the workload requirements. Without a clear understanding of the container workloads and how an application’s containers correlate, most of the specifications are guesswork. Worse, IT teams are too busy to learn how to master a new platform.

- How to optimize the cost of supporting their applications in the cloud? The auto-scaler and scheduler in the standard Kubernetes distribution are reactive and basic, resulting in lower performance than expected.
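To illustrate what "reactive" means in the last point: the Kubernetes documentation describes the Horizontal Pod Autoscaler's core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, which responds only to load that has already been observed. The following is a minimal sketch of that published formula, not the customer's configuration; the function name and sample values are hypothetical, and the sketch ignores the tolerance band, stabilization windows, and minimum/maximum replica bounds that a real autoscaler applies.

```python
import math


def hpa_desired_replicas(current_replicas: int,
                         current_metric: float,
                         target_metric: float) -> int:
    """Core HPA scaling rule as documented for Kubernetes.

    The result depends only on the metric value already measured,
    so scaling happens after the load has changed, not before.
    """
    return math.ceil(current_replicas * current_metric / target_metric)


if __name__ == "__main__":
    # Hypothetical example: 4 replicas averaging 90% CPU against a 60% target.
    print(hpa_desired_replicas(4, 90.0, 60.0))
```

Because the input is a measurement of current load, new replicas are requested only once the metric has already exceeded its target.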

Solution: Improving Performance at Lower Cost

Federator.ai for OpenShift is a certified, trusted operator for OpenShift. It is installed in the deployed cluster to make moving to the MultiCloud environment a more enjoyable journey. The features that they found valuable include:

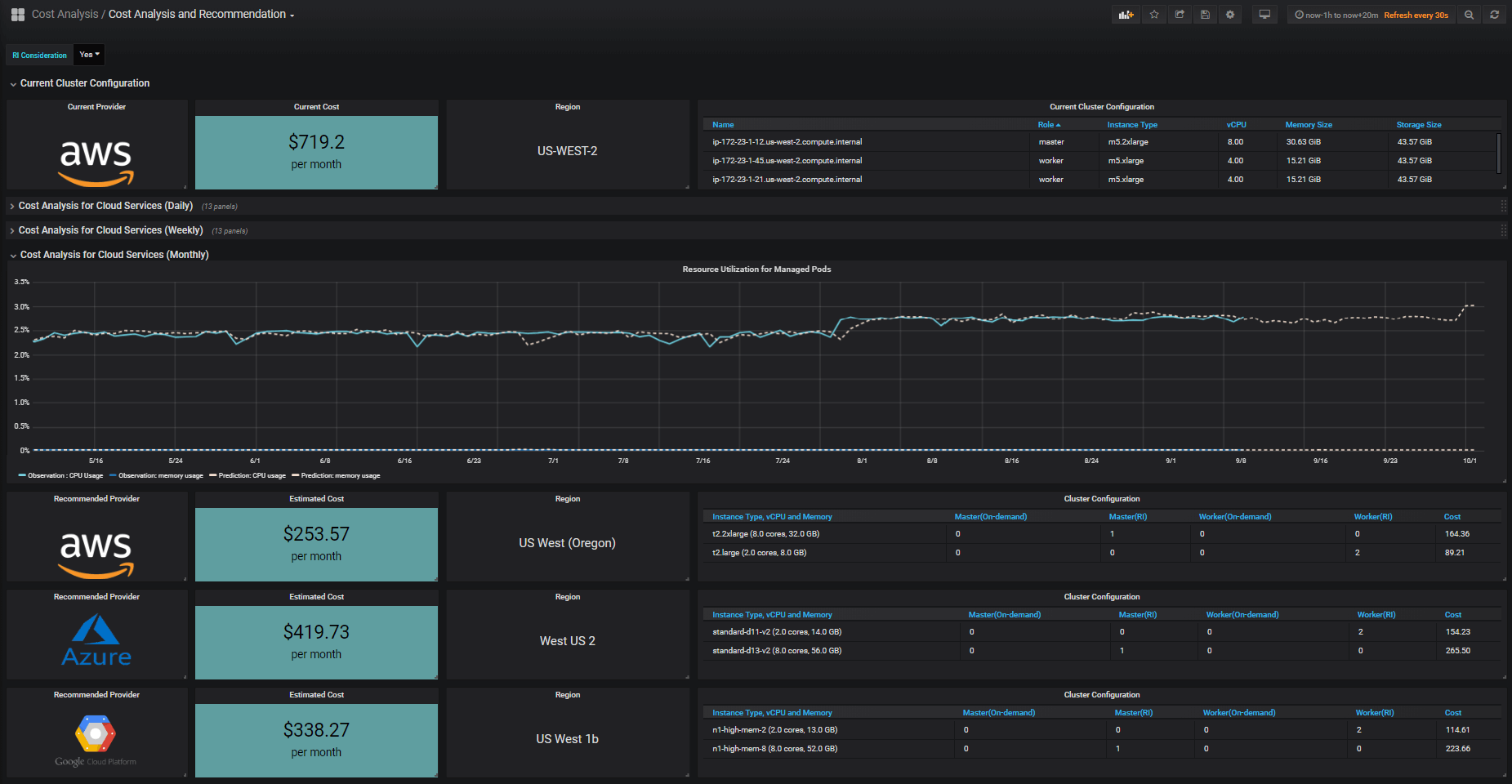

- Resource and cost optimization for each application: Federator.ai provides visibility into the cloud resources required for each application workload and determines the lowest-cost instances from different cloud providers that can support the application. The solution helps reduce cost by up to 60% compared with the over-provisioned plans originally adopted.

- Improved performance: Federator.ai provides a significantly better auto-scaling and workload management solution than the native auto-scaling mechanisms of Kubernetes. Federator.ai reduces application latency by up to 70% for a wide range of workloads. In a comparison with the native HPA using the same NGINX workloads, Federator.ai improved the average time per request from 101.47 ms to 16.78 ms, an 83.46% improvement. This is a significant result considering that NGINX is the most popular networking/proxy solution in containerized applications.
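The 83.46% figure in the NGINX comparison follows directly from the two reported latencies. As a quick check, this short sketch recomputes it; the function and variable names are illustrative only, and the two input values are the ones quoted above.

```python
def percent_improvement(before_ms: float, after_ms: float) -> float:
    """Relative reduction in latency, expressed as a percentage."""
    return (before_ms - after_ms) / before_ms * 100.0


# Average time per request reported for the NGINX workload.
native_hpa_ms = 101.47
federator_ms = 16.78

print(f"{percent_improvement(native_hpa_ms, federator_ms):.2f}%")  # prints 83.46%
```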

The user gains flexibility, scalability, cost efficiency, and performance in an integrated manner. Proactive management handles the complexity of Kubernetes and brings peace of mind to the deployment. They can now expand their use of cloud services with the benefits of containerized applications and the management platform.

The following dashboard shows how the customer can predict container workloads, with recommended settings that provide just-in-time-fitted container execution resources, and it even indicates the cloud services with the lowest cost. With ProphetStor’s Federator.ai, the company is delighted to enjoy the journey to MultiCloud, assured that application performance and the value of operations are optimized.

- 60%: Facilitates the optimal procurement of cloud instances, with up to 60% cost savings

- 70%: Reduces application latency by up to 70% for workloads
