Introduction

Data centers today face unprecedented challenges from rapidly escalating computing demands, especially those driven by artificial intelligence (AI) and high-performance computing (HPC). These advanced workloads result in substantially higher power densities, significantly increasing operational costs and environmental impacts due to cooling inefficiencies. With data center energy consumption expected to reach approximately 2% of global electricity usage by 2025 and up to 21% globally by 2030 (AI models are devouring energy. Tools to reduce consumption are here, if data centers will adopt | Lincoln Laboratory), the adoption of advanced cooling technologies for AI data centers is no longer optional—it’s essential.

Google’s AI-Enabled Cooling approach, pioneered in collaboration with DeepMind, demonstrates significant improvements by leveraging sophisticated AI algorithms to optimize traditional air-based cooling systems autonomously. ProphetStor’s Smart Liquid Cooling advances this concept further by integrating AI-driven, application-aware resource management directly with advanced cooling technologies, such as direct liquid cooling (DLC). The transition from air cooling to liquid cooling is a necessary prerequisite to the adoption of the latest energy-hungry GPU models, like the NVIDIA GB200. ProphetStor is helping to maximize the benefits of liquid cooling by providing a Smart Liquid Cooling software platform that orchestrates liquid cooling across GPU clusters to lower PUE, increase fault tolerance, boost performance, lengthen GPU lifespans, and improve ESG reporting, while driving API standardization.

This whitepaper presents a detailed comparative analysis of Google’s air-cooling methodology with ProphetStor’s liquid cooling methodology, examining technical distinctions including application awareness, energy efficiency, and deployment complexity, as well as respective market positioning. This analysis will identify the unique advantages of both approaches and will show why ProphetStor’s approach to smart liquid cooling orchestration will make it easier and more economical to deploy and manage the complexity and energy demands of next-generation AI infrastructure.

Technical Breakdown

- Google’s AI Cooling Methodology: Google (with DeepMind) developed an AI system that autonomously controls data center cooling. A cloud-based AI agent gathers thousands of sensor readings every five minutes and feeds them to deep neural networks to predict the impact of various cooling adjustments (Safety-first AI for autonomous data centre cooling and industrial control | Google DeepMind). The AI then selects optimal actions (like adjusting cooling tower speeds, chillers, and fan speeds) to minimize energy use while respecting safety constraints. This is essentially a deep reinforcement learning approach: the AI “learns” the complex thermodynamics of the facility and continuously optimizes cooling in real-time. Google’s system focuses on traditional air-based cooling infrastructure (e.g., CRAC units, fans, water chillers), adjusting those parameters more intelligently than human operators could. By 2018, Google had moved from merely recommending settings to fully automating the cooling controls under human oversight. The AI’s sophistication lies in its predictive modeling (anticipating future temperatures and loads) and robust safety mechanisms – Google implemented at least eight safety layers (confidence estimations, two-tier verification, human override, etc.) to ensure reliability. In short, Google’s AI cooling is a highly specialized control system using deep learning to fine-tune cooling equipment operations.

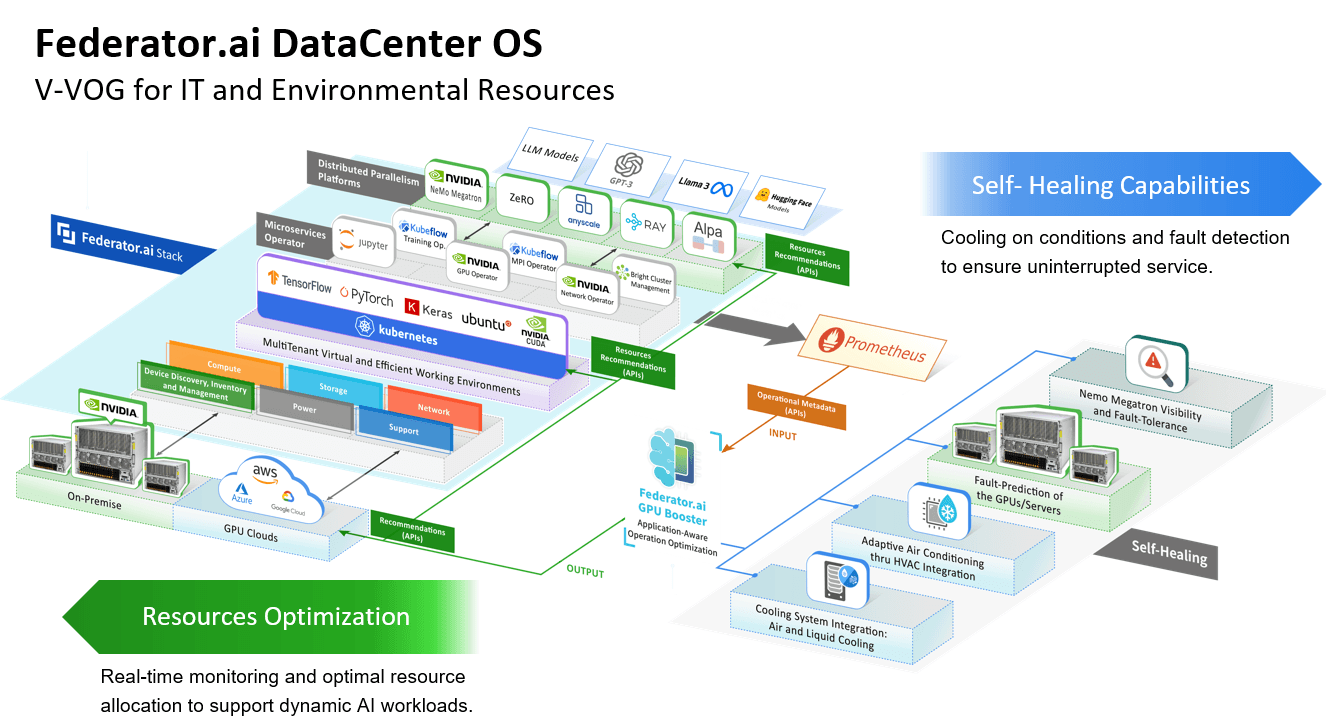

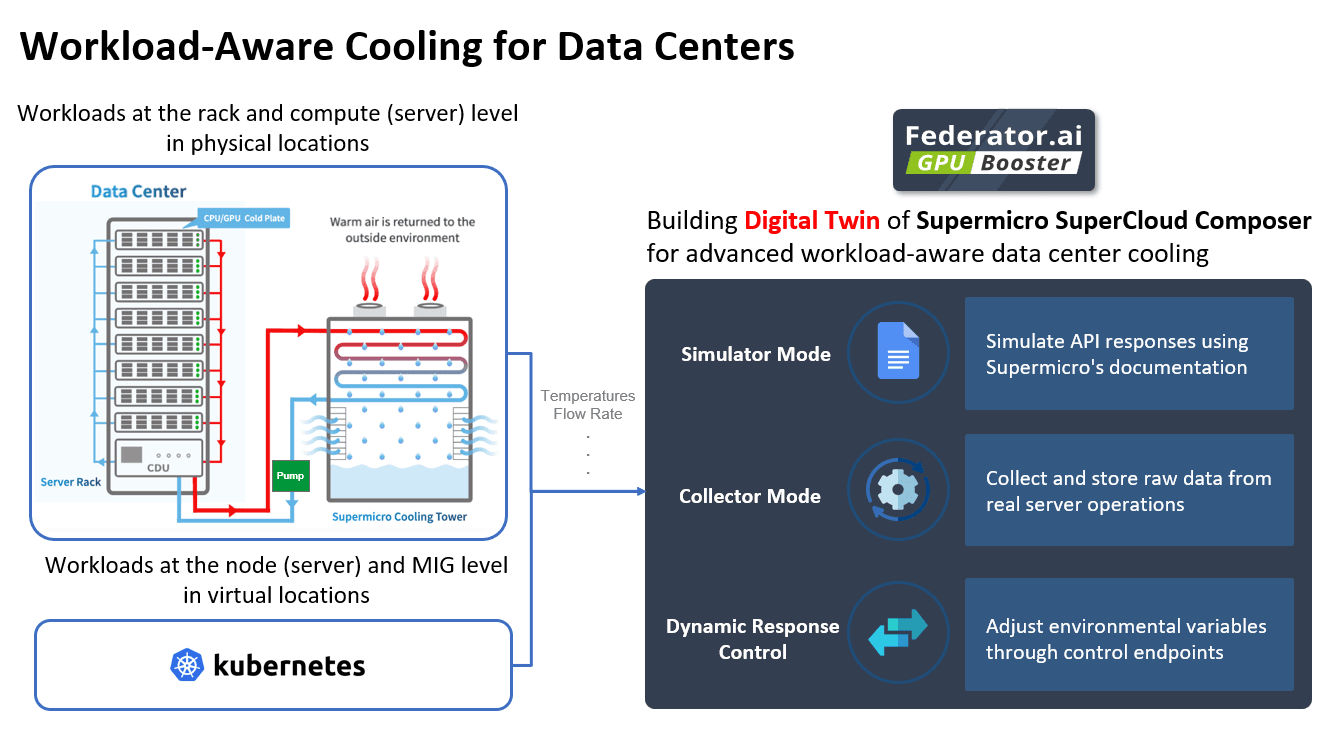

- ProphetStor’s Smart Liquid Cooling Methodology: ProphetStor’s approach, offered through its Federator.ai DataCenter OS platform, combines AI with multi-layer data correlation across both IT workloads and facility systems. Technically, ProphetStor uses machine learning algorithms that not only analyze environmental metrics (temperatures, power usage, etc.) but also ingest data from servers, GPUs, storage, and network devices (Innovative AI Solution for Data Centers | ProphetStor). This application-aware design means the AI understands what applications/workloads are running, their resource needs, and how they impact heat generation. ProphetStor’s solution employs predictive analytics (via its patented time-series analysis engine) to forecast future resource demand and cooling requirements, essentially performing prescriptive optimization for both compute and cooling resources (Federator.ai DataCenter OS for AI-Defined Data Center | ProphetStor). A distinguishing technical feature is support for advanced cooling hardware: ProphetStor can interface with direct liquid cooling (DLC) systems and other modern cooling setups. For example, it can adjust liquid flow or fan speeds in server liquid-cooling loops in concert with workload placement decisions. Whereas Google’s AI mainly tweaks cooling devices, ProphetStor’s AI might decide to spread a heavy workload across more servers to avoid hotspots, throttle non-critical tasks, or even hibernate idle servers to reduce heat, then adjust cooling accordingly (Green IT/ ESG | ProphetStor). In summary, ProphetStor’s technology is a holistic AI-driven orchestration system that coordinates IT resource allocation and cooling system adjustments together. It uses sophisticated algorithms to correlate multiple layers of data (application, virtualization, hardware, and environment) for smarter cooling decisions that traditional single-layer approaches can miss.
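The predict-then-select loop described for Google's system can be sketched in a few lines. This is a minimal illustration, not Google's implementation: the `Prediction` record, the action names, the 27 °C limit, and the 0.9 confidence threshold are all assumptions made up for the example. It shows only the idea of discarding candidate actions that are predicted unsafe or that the model is unsure about, then picking the lowest-energy action that remains.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prediction:
    """Hypothetical model output for one candidate cooling action."""
    action: str
    energy_kw: float    # predicted cooling power if the action is taken
    max_temp_c: float   # predicted hottest sensor reading
    confidence: float   # model confidence in this prediction, 0..1


def select_action(predictions, temp_limit_c=27.0, min_confidence=0.9):
    """Return the lowest-energy action that is predicted safe and trusted.

    Returns None when no candidate qualifies; a caller would then keep the
    current settings or hand control back to operators.
    """
    eligible = [
        p for p in predictions
        if p.max_temp_c <= temp_limit_c and p.confidence >= min_confidence
    ]
    return min(eligible, key=lambda p: p.energy_kw, default=None)


# Illustrative candidates (all values are invented for this sketch).
candidates = [
    Prediction("hold_current", 520.0, 24.5, 0.99),
    Prediction("slow_tower_fans", 455.0, 26.1, 0.95),
    Prediction("raise_chiller_setpoint", 430.0, 27.8, 0.97),  # exceeds limit
    Prediction("aggressive_combo", 400.0, 26.5, 0.60),        # low confidence
]

best = select_action(candidates)
print(best.action if best else "no safe action")
```

In this example the two cheapest actions are rejected, one for exceeding the temperature limit and one for low confidence, so the selector falls back to the cheapest action that passes both checks.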

Application Awareness

A key differentiator between Google’s and ProphetStor’s solutions is application/workload awareness:

- Google – Environment-Focused Control: Google’s AI cooling treats the data center as a thermal environment to be controlled. It looks at metrics like temperatures, humidity, power draw, etc., and optimizes cooling purely based on those readings (Safety-first AI for autonomous data centre cooling and industrial control | Google DeepMind). The system is largely agnostic to what specific applications or workloads are running – it only sees their aggregate effect (heat output) on the environment. Google’s approach was incredibly effective at tweaking cooling infrastructure, but it operates with a “single layer” view (the facility layer). It does not explicitly incorporate knowledge of which servers could be idling or which jobs could be deferred to reduce heat. In fact, ProphetStor notes that Google’s method “uses a single layer of data and is unaware of the specific application workloads,” meaning it relies on general patterns and heuristics rather than workload-specific insights (Innovative AI Solution for Data Centers | ProphetStor). This lack of application awareness is by design – Google attacked the problem from the facilities side, assuming the IT load as a given input to cool as efficiently as possible.

- ProphetStor – Integrated IT & Environmental Optimization: ProphetStor’s solution is application-aware by design. It continuously correlates application behavior with energy and cooling demands. In practice, this means the AI knows, for example, when a batch of AI training jobs will ramp up GPU usage (and heat) or when certain servers can be hibernated. It predicts workload patterns (CPU/GPU utilization trends, job schedules, etc.) and preemptively adjusts cooling and allocates resources in tandem (Federator.ai DataCenter OS for AI-Defined Data Center | ProphetStor). Because it understands the “why” behind energy use (the workloads), ProphetStor’s system can make more nuanced decisions. For instance, instead of just increasing cooling when temperatures rise, it might redistribute workloads to prevent localized overheating or delay a non-urgent job to an off-peak time when cooling is more efficient. This dual awareness leads to more optimal outcomes – the cooling system isn’t working at odds with the IT load, but rather in concert with it. ProphetStor emphasizes that this multi-layer correlation (spanning applications to physical infrastructure) avoids the purely heuristic nature of simpler single-layer algorithms. The result is a smarter cooling strategy that optimizes computing performance while maintaining effective thermal management. In short, Google’s AI adjusts the cooling to the IT load, whereas ProphetStor’s AI can adjust the IT load and the cooling together to achieve the best overall efficiency.
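To make the idea of adjusting the IT load and the cooling together more concrete, the sketch below places jobs on servers by projected heat instead of reacting to temperature afterwards. It is an illustrative greedy heuristic under stated assumptions, not ProphetStor's algorithm: the per-job heat estimates, the per-server thermal budget, and the function name `place_jobs` are all hypothetical.

```python
def place_jobs(job_heat_w, server_heat_w, server_limit_w):
    """Greedy, heat-aware placement.

    Jobs are taken largest first and assigned to the currently coolest
    server. A job that would push even the coolest server past its thermal
    budget is deferred (for example, to an off-peak window).
    Returns (placement, deferred, projected_load).
    """
    load = dict(server_heat_w)  # copy so the caller's data is not mutated
    placement, deferred = {}, []
    for job, heat in sorted(job_heat_w.items(), key=lambda kv: -kv[1]):
        coolest = min(load, key=load.get)
        if load[coolest] + heat <= server_limit_w:
            load[coolest] += heat
            placement[job] = coolest
        else:
            deferred.append(job)
    return placement, deferred, load


# Hypothetical heat estimates in watts.
jobs = {"train-a": 600, "train-b": 500, "batch-c": 400, "batch-d": 300}
servers = {"s1": 300, "s2": 100, "s3": 200}

placement, deferred, load = place_jobs(jobs, servers, server_limit_w=800)
print(placement)
print(deferred)
print(load)
```

With these numbers the three largest jobs are spread so that every server ends at the same projected heat, and the smallest job is deferred because no server has headroom left. A cooling controller that sees this plan in advance can set flow rates for an even load instead of chasing a hotspot.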

Efficiency Metrics

Both solutions have demonstrated impressive improvements in energy efficiency, though measured in slightly different ways:

- Google’s Energy Savings: Google reported that its machine learning controls cut the energy used for cooling by up to 40% – a massive reduction (DeepMind: Google’s AI saves the amount of electricity used in data centres | WIRED). In terms of overall efficiency, this translated to about a 15% reduction in PUE (Power Usage Effectiveness) overhead at the data centers where it was deployed (Google uses artificial intelligence to boost data center efficiency | Utility Dive). (For context, PUE overhead is the energy a facility consumes beyond the IT load itself, so a 15% reduction in that overhead is a meaningful gain but a smaller percentage cut in total facility energy.) In later iterations, after deploying the AI broadly, Google noted the system stably delivered around 30% average cooling energy savings in multiple data centers, with potential to improve further as the algorithms learned over time (Safety-first AI for autonomous data centre cooling and industrial control | Google DeepMind). These gains are enormous in an industry where single-digit percentage improvements are notable. Importantly, reducing cooling energy directly lowers carbon footprint as well – Google’s team framed the achievement as a “phenomenal step forward” for efficiency with significant emissions reduction. The AI also maintained or improved overall thermal conditions, meaning efficiency was gained without sacrificing reliability. In sum, Google’s metrics (up to 40% cooling energy cut, 15% PUE overhead reduction) set a high benchmark for AI in facilities.

- ProphetStor’s Efficiency Gains: ProphetStor delivers similarly strong improvements and does so with a broader focus on IT and thermal efficiency. According to ProphetStor, data centers using its Federator.ai platform can save up to 50% on energy costs overall (Green IT/ ESG | ProphetStor). This figure isn’t limited to cooling alone; it includes savings from rightsizing compute resources (hibernating unused servers, avoiding over-provisioning) as well as smarter cooling. By precisely aligning power and cooling supply with the actual needs of applications, waste is minimized across the board (Innovative AI Solution for Data Centers | ProphetStor). For example, if ProphetStor’s AI sees that 20% of servers are running trivial workloads, it can consolidate those tasks and rebalance the workload across servers – cutting electricity demand for both compute and cooling for those machines. In essence, ProphetStor aims to improve the total energy efficiency of the data center, not just that of the cooling plant, to achieve a significantly larger combined effect. A case study at a GPU-intensive supercomputing center showed that applying ProphetStor’s AI led to a 60% increase in utilization of GPU resources (meaning much less idle time) (Case Study: Data Center Utilization up 60% | ProphetStor). Higher utilization means more useful work per unit of energy, indirectly improving energy efficiency of the facility. Additionally, ProphetStor’s focus on liquid cooling integration means it leverages the inherent efficiency of liquid cooling technology. Liquid cooling can remove heat more efficiently than air, enabling higher performance per watt and even reducing the power needed for cooling fans, etc. (studies note that targeting heat at the source with liquid often reduces overall energy consumption vs. air cooling) (Unlocking ROI with advanced data center liquid cooling technologies | DCD). It is reasonable to infer that ProphetStor’s approach yields PUE improvements comparable to Google’s, or better in scenarios with lots of idle equipment (since making more optimized use of idle servers dramatically cuts waste). Finally, on environmental impact: both solutions contribute to carbon reduction. ProphetStor explicitly ties into ESG goals – by cutting energy use, data centers reduce emissions and can even monetize carbon savings (e.g. selling saved energy or carbon credits in regions with carbon pricing) (Innovative AI Solution for Data Centers | ProphetStor). Google’s 40% cooling savings similarly translate to thousands of tons of CO₂ avoided.

In short, Google drives greater cooling efficiency by focusing on the thermal environment, whereas ProphetStor focuses on both compute and cooling, an IT/OT orchestration approach that ProphetStor reports can cut overall energy costs by up to half.
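How a cooling-energy cut relates to PUE can be checked with simple arithmetic. The sketch below uses the standard definition of PUE (total facility power divided by IT power) and a hypothetical facility; the 1,000 kW IT load, 300 kW cooling load, and 100 kW of other overhead are invented for illustration and do not describe any Google or ProphetStor site.

```python
def pue(it_kw, cooling_kw, other_overhead_kw):
    """Power Usage Effectiveness: total facility power / IT power."""
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw


# Hypothetical facility (all three loads are assumptions for this example).
it_kw, cooling_kw, other_kw = 1000.0, 300.0, 100.0

before = pue(it_kw, cooling_kw, other_kw)
after = pue(it_kw, cooling_kw * 0.60, other_kw)  # 40% less cooling energy

# "Overhead" is the part of PUE above 1.0, i.e. everything that is not IT load.
overhead_cut = ((before - 1.0) - (after - 1.0)) / (before - 1.0)
total_energy_cut = 1.0 - after / before

print(f"PUE before: {before:.2f}, after: {after:.2f}")
print(f"PUE overhead reduced by {overhead_cut:.0%}")
print(f"Total facility energy reduced by {total_energy_cut:.1%}")
```

For this hypothetical facility, a 40% cooling cut moves PUE from 1.40 to 1.28. That is a 30% reduction in overhead but only about an 8.6% reduction in total facility energy, which is why cooling-only and whole-facility savings figures should not be compared directly.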

Implementation Complexity

Implementing these AI cooling solutions involves different levels of complexity and effort:

- Google’s In-House Deployment: Google’s AI cooling system was a bespoke solution, tightly integrated into Google’s own infrastructure. Deploying it required instrumenting data centers with a vast sensor network and interfacing the AI with existing control systems. The AI monitors “over 100 variables” in the cooling systems (temperatures, pump speeds, fan speeds, valve positions, etc.) (Google uses artificial intelligence to boost data center efficiency | Utility Dive) – indicating how complex a modern data center HVAC plant is. Building an AI to juggle all those parameters meant Google’s engineers had to invest significant time in data collection, model training, and simulation before going live. Even after development, Google prioritized safety and reliability: they implemented multiple fail-safes, like two-layer verification of AI decisions (the cloud AI’s recommended actions are checked against rules by on-site controllers before execution) and continuous uncertainty checks (the AI only acts when it has high confidence in a beneficial outcome) (Safety-first AI for autonomous data centre cooling and industrial control | Google DeepMind). Operators can override the AI at any time, and the system will smoothly hand back control if needed. All this adds complexity but was necessary to trust the AI with critical cooling operations. In practice, Google’s rollout involved gradually ramping up autonomy (starting with recommendation mode in 2016, to full autonomous control by 2018). Each data center had to be tuned and the AI model continuously retrained with new data. This kind of project requires a team of data scientists and reliability engineers – a resource commitment feasible for Google, but not for everyone. However, once implemented, the maintenance is relatively low: the AI runs in Google’s cloud, self-adjusting as it learns, and operators just supervise. 
The system is scalable across Google’s fleet of data centers via cloud deployment, but it’s essentially custom-built for Google’s environments (it’s not an off-the-shelf product). Thus, the complexity of implementation is high, but Google absorbed it internally.

- ProphetStor’s Deployment Model: ProphetStor’s solution is an integrated, open, infrastructure-agnostic platform for other data center operators. This generally makes implementation easier for users compared to DIY solutions. Federator.ai can hook into data center metrics by integrating with popular monitoring systems (e.g. Prometheus, Datadog) that likely already exist. ProphetStor leverages APIs and existing telemetry instead of requiring a whole new sensor network (while additional environmental sensors can be beneficial, the ability of ProphetStor to ingest data from existing OT infrastructure like power meters and server sensors makes deployment easier). ProphetStor’s platform is built to be interoperable, capable of supporting a variety of hardware (CPUs, GPUs, different server brands) and cooling setups out of the box (Federator.ai DataCenter OS for AI-Defined Data Center | ProphetStor). For example, if one data center has modern liquid-cooled racks, Federator.ai can interface with that data center’s cooling system controllers; if another has traditional CRAC units, Federator.ai can interface via those controls as well. ProphetStor emphasizes “comprehensive integration capabilities,” indicating that ProphetStor has done the work to make connectors for common data center equipment and management software. The Federator.ai DataCenter OS platform can be deployed on-premises or in the cloud, from where it can be pointed to the necessary data sources and manage liquid cooling optimization policies. This is far simpler than creating a bespoke solution for each unique deployment. Maintenance of Federator.ai DataCenter OS is handled through automatic platform updates, so the user doesn’t need to worry about applying patches and retraining models; ProphetStor makes platform updates transparent to the user.
Data centers with fully automated cooling and software-defined infrastructure will benefit from the ability to “plug and play” Federator.ai DataCenter OS directly into existing infrastructure management control frameworks. In legacy environments, there may be some integration work required to allow the ProphetStor software to send commands to control cooling units and orchestrate compute workloads. Still, the heavy lift of developing AI/ML models, correlation engines, and supporting functionality is done by ProphetStor, allowing operations teams to deploy and manage Federator.ai DataCenter OS without needing deeply specialized infrastructure skills. In terms of scalability, the platform is built to scale from single racks to whole facilities and even across multiple data centers. In fact, the concept of orchestrating resources across multiple data centers was the inspiration behind the “Federator.ai” name and is core to ProphetStor’s vision of enabling a global marketplace of virtual compute capacity that can be traded between data centers via an “AboveCloud” orchestrator (Federator.ai DataCenter OS for AI-Defined Data Center | ProphetStor). Such scalability ensures that an initial implementation can seamlessly grow to accommodate the growth of a customer’s data center infrastructure. ProphetStor also strives to shield customers from hardware complexity inherent in environments using newer liquid cooling technology. Implementing the physical infrastructure of liquid cooling introduces non-trivial challenges such as plumbing, coolant management, and leak detection, which are generally more complex than the challenges involved in implementing standard air cooling (Unlocking ROI with advanced data center liquid cooling technologies | DCD). Federator.ai DataCenter OS virtualizes the liquid cooling hardware layer, making management and integration easier and future-proofing data center hardware investments as new technologies are introduced.

In summary, while Google’s solution was complex to develop and integrate and essentially “hardcoded” to bespoke hardware configurations, ProphetStor’s solution is designed to minimize complexity by providing a flexible, unified AIOps platform that is easier to deploy and maintain for a typical operator, reducing the skills, time, and cost needed to support the modern AI data center.
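The two-layer verification mentioned for Google's deployment, where recommended actions are checked against rules by on-site controllers before execution, can be sketched as a small local guard. This is a simplified illustration under stated assumptions: the control names, the operator limits, and the policy of keeping the current value when a recommendation is out of range are hypothetical and are not taken from Google's or ProphetStor's systems.

```python
def verify_setpoints(recommended, limits, current):
    """Second-layer check run locally before any setpoint is applied.

    For every control, the recommendation is accepted only if it lies inside
    the operator-defined (low, high) range; otherwise the current value is
    kept and the control name is reported as rejected.
    """
    applied, rejected = {}, []
    for name, current_value in current.items():
        low, high = limits[name]
        proposed = recommended.get(name, current_value)
        if low <= proposed <= high:
            applied[name] = proposed
        else:
            applied[name] = current_value
            rejected.append(name)
    return applied, rejected


# Hypothetical controls and limits.
current = {"chiller_setpoint_c": 7.0, "tower_fan_pct": 60.0}
limits = {"chiller_setpoint_c": (5.0, 10.0), "tower_fan_pct": (30.0, 100.0)}
recommended = {"chiller_setpoint_c": 9.0, "tower_fan_pct": 20.0}

applied, rejected = verify_setpoints(recommended, limits, current)
print(applied)
print(rejected)
```

Here the chiller recommendation is inside its limits and is applied, while the fan recommendation falls below the allowed range and is rejected in favor of the current setting. Keeping this check outside the model means a bad prediction cannot push equipment past limits that operators have set.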

Market Positioning & Adoption

The two solutions occupy different positions in the market and have seen different adoption trajectories:

- Google’s AI Cooling (Market Position): Google designed its cooling solution as an internal innovation, and was successful in showcasing what’s possible when AI is applied to data center operations at scale. Google has implemented its AI cooling control in multiple of its own data centers worldwide (Safety-first AI for autonomous data centre cooling and industrial control | Google DeepMind), making its already-efficient facilities even more efficient. However, Google did not commercialize this specific technology for external customers (at least not directly). Instead, the impact on the market has been through inspiration and knowledge-sharing. Google’s published results (40% cooling energy savings) garnered significant attention in the data center industry, and established Google as a leader in green AI operations (Google uses artificial intelligence to boost data center efficiency | Utility Dive). Many other large operators and vendors took note – for instance, some former Google/DeepMind engineers founded a startup (Phaidra) to bring similar AI cooling tech to other industries (Alums From Google’s DeepMind Want to Bring AI Energy Controls to Industrial Giants | DataCenter Knowledge). Companies like VMware, Schneider Electric, and Johnson Controls have also been developing AI-driven data center management features, clearly influenced by the pioneering work that Google did. Google’s AI cooling isn’t something you can buy; it’s a competitive advantage Google uses internally and a reference model for the industry. Adoption beyond Google is indirect in the sense that others have tried to replicate the approach. Today, most hyperscale cloud companies (Microsoft, Amazon, etc.) have their own efficiency initiatives (though most have been quieter when it comes to publishing implementation details). 
Google’s early adoption of AI-driven cooling was successful at proving the concept at scale, and the lessons learned are now finding broader adoption through third-party solutions and open research. Google’s research publications and blog posts have guided industry best practices, and many data center operators now expect AI to play a role in efficiency, partly due to Google’s success.

- ProphetStor’s Smart Cooling (Market Position): ProphetStor positions itself as an enabler for any data center (enterprise, cloud, HPC, etc.) to achieve “Google-like” optimization. It brands Federator.ai as an “AI-Defined Data Center OS” that can deliver efficiency, sustainability, and automation to operators who don’t have in-house AI teams (Federator.ai DataCenter OS for AI-Defined Data Center | ProphetStor). ProphetStor is a smaller, specialized player in the AIOps (AI for IT operations) market. Its niche focus on GPU-intensive environments and liquid cooling makes it particularly attractive for high-performance computing centers and AI cloud providers. In terms of adoption, ProphetStor has reported some notable deployments. A national supercomputing center in Taiwan, for instance, deployed Federator.ai to optimize its GPU cluster, resulting in 60% higher utilization and plans to expand Federator.ai across all its clouds (Case Study: Data Center Utilization up 60% | ProphetStor). This customer success story serves as a testament to ProphetStor’s pioneering work on IT/OT convergence in the AI data center, and the subsequent benefits of improved IT and cooling efficiency that include lower PUE, increased fault tolerance, higher workload performance, longer GPU lifespans, and greater ability to meet ESG targets.

Cost Considerations

When evaluating cost and ROI, there are differences in perspective between Google’s self-use case and ProphetStor’s product offering:

- Google – Cost & ROI: Google’s motivation for its AI cooling project was both environmental and economic. By cutting 30-40% of cooling energy, Google saved a tremendous amount on electricity bills. Cooling can account for a significant fraction of a data center’s power usage (often 30-40% in inefficient facilities, less in optimized ones), so a 40% reduction in cooling energy could yield around 10-15% savings in total data center power costs (Google uses artificial intelligence to boost data center efficiency | Utility Dive). At Google’s scale, that translates to millions of dollars saved per year in each large data center. The breakeven on this investment likely came very fast – the main cost was the R&D effort and computing resources to run the AI. Considering Google already had the talent (DeepMind) and infrastructure, the incremental cost was moderate, and it paid off through lower ongoing OpEx. Additionally, improved PUE means Google can do more computing work for the same power budget, potentially delaying expensive capacity upgrades or new construction. In terms of total cost of ownership, the AI system likely reduced wear on cooling equipment too (by running things optimally rather than with manual conservative margins, equipment might cycle less or operate in efficient regimes). We should note that Google’s solution was not monetized; it’s an internal investment. But if one were to imagine its value, it’s been described as shaving “huge chunks off the company’s electricity bill” (Alums From Google’s DeepMind Want to Bring AI Energy Controls to Industrial Giants | DataCenter Knowledge) – a clear cost win. For Google, there’s also the reputational and environmental value in cutting energy use to help meet carbon-reduction goals. In summary, while Google’s AI cooling had upfront development costs, the energy cost savings (40% of cooling costs) delivered a strong ROI. 
The total cost of ownership of their data centers improved via lower energy spend and better reliability that minimizes downtime costs (which can be very high).

- ProphetStor – Cost & ROI: ProphetStor’s solution is marketed on the promise of cost savings and quick payback. The headline figure is an energy cost reduction of up to 50% (Green IT/ ESG | ProphetStor). ProphetStor also points to monetizing the savings, for example by selling saved energy or carbon credits in regions with carbon pricing. This is an innovative way to view ROI – not just saving cost, but turning efficiency into a revenue or credit stream. In terms of total cost of ownership, using ProphetStor’s AI might incur some overhead (software running on servers, etc.), but that is negligible compared to the savings. Maintenance of the software is outsourced (as part of the product support), so the operator doesn’t need to hire a data science team – saving on labor costs as well.
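The relationship between cooling savings and total power cost quoted above follows from one multiplication: the share of facility power that goes to cooling times the fraction of cooling energy saved. The sketch below works this through for the 30% and 40% cooling shares mentioned in the text. The annual energy figure and electricity price are placeholders chosen only to show the calculation and do not describe any real facility.

```python
def total_savings_fraction(cooling_share, cooling_reduction):
    """Fraction of total facility energy saved by a cooling-only reduction."""
    return cooling_share * cooling_reduction


def annual_savings_usd(annual_energy_mwh, price_usd_per_mwh, savings_fraction):
    """Yearly cost savings for a given energy use, price, and savings fraction."""
    return annual_energy_mwh * price_usd_per_mwh * savings_fraction


# Cooling shares from the text; the 40% reduction is Google's reported best case.
for share in (0.30, 0.40):
    fraction = total_savings_fraction(share, 0.40)
    print(f"cooling share {share:.0%} -> total savings {fraction:.0%}")

# Placeholder facility: 100,000 MWh per year at 80 USD/MWh (both assumed).
example = annual_savings_usd(100_000, 80.0, total_savings_fraction(0.30, 0.40))
print(f"example annual savings: ${example:,.0f}")
```

The result is 12% to 16% of total power cost for cooling shares of 30% to 40%, in line with the rough 10-15% range quoted above. For facilities where cooling is already a small share of power, the same cooling reduction yields proportionally less.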

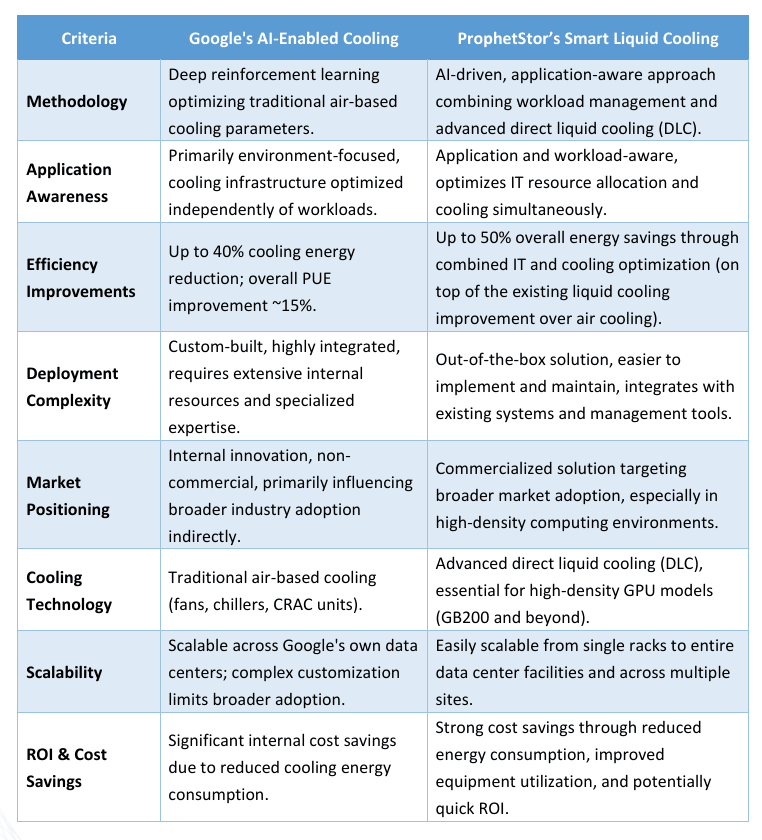

| Criteria | Google's AI-Enabled Cooling | ProphetStor’s Smart Liquid Cooling |
| --- | --- | --- |
| Methodology | Deep reinforcement learning optimizing traditional air-based cooling parameters. | AI-driven, application-aware approach combining workload management and advanced direct liquid cooling (DLC). |
| Application Awareness | Primarily environment-focused, cooling infrastructure optimized independently of workloads. | Application and workload-aware, optimizes IT resource allocation and cooling simultaneously. |
| Efficiency Improvements | Up to 40% cooling energy reduction; overall PUE improvement ~15%. | Up to 50% overall energy savings through combined IT and cooling optimization (on top of the existing liquid cooling improvement over air cooling). |
| Deployment Complexity | Custom-built, highly integrated, requires extensive internal resources and specialized expertise. | Out-of-the-box solution, easier to implement and maintain, integrates with existing systems and management tools. |
| Market Positioning | Internal innovation, non-commercial, primarily influencing broader industry adoption indirectly. | Commercialized solution targeting broader market adoption, especially in high-density computing environments. |
| Cooling Technology | Traditional air-based cooling (fans, chillers, CRAC units). | Advanced direct liquid cooling (DLC), essential for high-density GPU models (GB200 and beyond). |
| Scalability | Scalable across Google’s own data centers; complex customization limits broader adoption. | Easily scalable from single racks to entire data center facilities and across multiple sites. |

ROI & Cost Savings

Strong cost savings through reduced energy consumption, improved equipment utilization, and potentially quick ROI.

This table provides a structured overview of both approaches, highlighting their distinct strategies, efficiency impacts, and market positioning.

Both Google’s AI-enabled cooling and ProphetStor’s Smart Liquid Cooling effectively demonstrate how artificial intelligence can substantially enhance data center energy efficiency. Google has set a benchmark by employing deep reinforcement learning to optimize existing cooling infrastructures, achieving remarkable reductions in energy use and demonstrating significant improvements in overall operational efficiency. In contrast, ProphetStor differentiates itself by integrating comprehensive, application-aware AI capabilities with advanced liquid cooling technologies, offering broader operational optimization that synchronizes compute workloads and cooling demands. This holistic approach not only achieves significant energy savings but also maximizes resource utilization, aligns with sustainability goals, and simplifies implementation for a broader range of data center operators. Ultimately, as data centers increasingly adopt advanced cooling technologies to manage the thermal challenges posed by AI and HPC workloads, solutions such as ProphetStor’s, which bridge intelligent resource management and sophisticated cooling technology, will likely become pivotal in defining the future landscape of efficient and sustainable data center operations.

References

- Evans and J. Gao, “DeepMind AI Reduces Google Data Centre Cooling Bill by 40%,” Google DeepMind Blog, Jul. 2016. Available: https://deepmind.google/discover/blog/deepmind-ai-reduces-google-data-centre-cooling-bill-by-40/.

- Gamble and J. Gao, “Safety-first AI for Autonomous Data Center Cooling and Industrial Control,” DeepMind Blog, Aug. 2018. Available: https://deepmind.google/discover/blog/safety-first-ai-for-autonomous-data-centre-cooling-and-industrial-control/.

- Walton, “Google uses artificial intelligence to boost data center efficiency,” Utility Dive, Jul. 2016. Available: https://www.utilitydive.com/news/google-uses-artificial-intelligence-to-boost-data-center-efficiency/423086/.

- Foy, “AI models are devouring energy. Tools to reduce consumption are here, if data centers will adopt,” MIT Lincoln Laboratory, Sep. 22, 2023. Available: https://www.ll.mit.edu/news/ai-models-are-devouring-energy-tools-reduce-consumption-are-here-if-data-centers-will-adopt.

- Burgess, “Google’s DeepMind trains AI to cut its energy bills by 40%,” WIRED, Jul. 2016. Available: https://www.wired.com/story/google-deepmind-data-centres-efficiency/.

- Swaminathan, “Unlocking ROI with advanced data center liquid cooling technologies,” DCD, Jan. 2025. Available: https://www.datacenterdynamics.com/en/opinions/unlocking-roi-with-advanced-data-center-liquid-cooling-technologies/.

- Bloomberg News, “Alums From Google’s DeepMind Want to Bring AI Energy Controls to Industrial Giants”, DataCenter Knowledge, Jul. 2022. Available: https://www.datacenterknowledge.com/sustainability/alums-from-google-s-deepmind-want-to-bring-ai-energy-controls-to-industrial-giants.

- ProphetStor, “AI-Defined Data Center: Federator.ai DataCenter OS for Optimal Efficiency, Sustainability, Automation, and Global Compute Platform Integration,” ProphetStor, 2023. Available: https://prophetstor.com/white-papers/federator-ai-datacenter-os-for-addc/.

- ProphetStor, “A GPU-based Supercomputer Sees 60% Increase in Utilization with Federator.ai,” ProphetStor, Case Study. Available: https://prophetstor.com/white-papers/federator-ai-datacenter-os-for-addc/.

- ProphetStor, “Green IT/ ESG,” ProphetStor Solutions. Available: https://prophetstor.com/green-it-esg/.

- ProphetStor, “ProphetStor’s AI-Enabled Energy Efficiency and Planning Solution for Modern Data Centers,” ProphetStor Whitepaper, 2023. Available: https://prophetstor.com/white-papers/innovative-ai-solution-for-data-centers/.

- ProphetStor, “Federator.ai GPU Booster®,” ProphetStor Product Introduction. Available: https://prophetstor.com/federator-ai-gpu-booster/.

- ProphetStor, “METHOD FOR ESTABLISHING SYSTEM RESOURCE REQUIREMENT PREDICTION AND RESOURCE MANAGEMENT MODEL THROUGH MULTI-LAYER CORRELATIONS,” U.S. Patent No. 11,579,933 B2, granted 2020. [Online]. Available: https://patents.google.com/patent/US11579933B2/en?oq=16%2f853%2c997.

- ProphetStor, “METHOD AND SYSTEM FOR OPTIMIZING COOLING RESOURCES IN A DATA CENTER BASED ON WORKLOAD PREDICTION,” U.S. Patent Pending.